Abstract: A successful and boring go-live depends on data that is cleansed and business-ready from the start. Data cleansing is key to minimizing issues during the implementation and to making sure the data works properly in the new system, which leads to a smooth transition.

By now, all companies using an ERP system should be aware that the correctness, completeness, integrity and currency of the master data are THE key to smooth and error-free use of that system. This was true in SAP R/2 and R/3, and it is still true in S/4HANA.

The following anecdote may illustrate this:

A good colleague of mine runs management training for companies that use SAP. He essentially wants to teach the managers two things: first, a basic understanding of cross-functional integration and, second, an awareness of the importance of master data. For this reason, at some point in the middle of the workshop, he makes himself comfortable in his chair, opens the newspaper and starts reading. He keeps this up until someone asks what he is doing. His answer: “I’m just showing you what work looks like at an SAP workstation when the master data is in order.”

Automated processing

And that is exactly how it is. The SAP ERP system in particular can handle large parts of entire business processes end-to-end, fully automatically and without manual intervention, provided the master data is complete and correctly maintained. If it is not, the company usually spends enormous resources compensating with constant operational interventions in individual transactions or other workarounds. These activities are usually mindless and do not add one iota of value to the business. So take the conversion to S/4HANA as an opportunity to eliminate this deficit! In the following, I will show you how to proceed sensibly and what tools and support are needed.

In the projects I managed during my first ten years as a project manager, I always had to focus heavily on data cleansing and data migration. Critical key users put immense effort into manually cleansing data and keeping it consistent across several sources until it was finally migrated, mostly with an unsatisfactory result because something was lost or overlooked somewhere. I had familiarized myself deeply with the possibilities of LSMW and had designed the SAP-side part of the migration myself in many projects. All in all, however, far too much effort went into this area, and the results were unsatisfactory.

This changed in 2007 when, as part of a project in Cairo, I came across a company specializing in SAP data migration that approached the whole issue in a completely different way. I was working in the project as an integration manager and was very surprised when the sub-project manager of the data migration told me that from now on I could give him three days’ notice if I wanted the integration client to be loaded with the complete productive data set for the integration test. I thought he was joking, but in the end that’s exactly what happened and the data quality in the integration system was excellent six months before go-live – in all aspects.

This impressed me so much at the time that I studied the procedure and the toolset (middleware) intensively. The toolset is now listed by SAP on the official price list as SAP Advanced Data Migration (ADM).

Here are the key success factors from my point of view:

- The topic of data migration is handled in an evolutionary prototyping approach – the principle “Load often – Load all” applies

- Once all data sources have been identified and connected to the middleware, the data in the legacy system is firmly under the control of the data migration team and all cleansing measures necessary for formal data quality and sufficient for the business readiness of the data are initiated, monitored and reported by the team at field level.

- The methodology is simple and easy to remember, and it ensures that no careless mistakes happen as long as the employees stick to it

- Data that has not yet been cleansed can be “faked” for a load according to defined rules, i.e. made loadable without losing sight of the cleansing measure that is still required.

- Source systems (SAP R/3 and others) and target system (S/4HANA) are connected almost permanently; the source data is loaded into the staging area of the middleware, where it is converted based on rules and checked for conformity with the system configuration (customizing) loaded from the target system. Discrepancies are, of course, reported.

- The toolset has a number of built-in functions and tools that effectively support data cleansing, such as:

  - Automated exclusion of all inactive records (those that are not transferred) from future cleansing activities

  - Identification of duplicate records and consistent merging of all dependent data to the defined winner (the record that will be taken over)

  - Ensuring the formal and content-related consistency of the complete data stock to be loaded (e.g. that the suppliers, materials, info records and source lists are available for all orders to be transferred, if this is necessary according to the settings made and data maintained; the same applies to all data relevant in production)

  - Automated transfer to new data structures in S/4 (the prime example is the merging of customers/debtors and suppliers/creditors into the new unified data object Business Partner)

  - Simple web-based interfaces for the data processing required by the business, such as determining the “winner” in the case of duplicate master data and enriching data records (e.g. with fields that do not yet exist in the legacy system, including various upload options), with the option to revise any decision made

  - Tracking and tracing of every activity that takes place automatically or manually in the toolset, and thus automated creation of audit-proof documentation of the complete data migration (master data, transaction data and historical data sets that are transferred)

  These functions allow the business users involved to focus exclusively on the decisions that only they can make in connection with the data migration, instead of wasting valuable time on banal data cleansing tasks.

- This approach also keeps manual data entry in the project, especially for testing purposes, to a minimum, because the data can be made available in good quality in each client at an early stage. Of course, this requires a correspondingly early start of the data migration workstream. My recommendation is to include it fully in the project planning from the outset and to involve a competent partner (I can gladly provide recommendations on this); only then will you derive the maximum benefit.
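To make the duplicate handling from the list above more tangible, here is a minimal sketch in Python. It is not how SAP ADM works internally. The matching rule (normalized name plus postal code), the field names and the function names are all assumptions chosen for illustration. The sketch groups likely duplicates, lets the business pick a winner, and repoints the dependent records (here: purchasing info records) to that winner while keeping a mapping for the audit trail.

```python
from collections import defaultdict


def normalize(name: str) -> str:
    """Crude matching key: lowercase and keep only letters and digits."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def find_duplicate_groups(vendors: dict[str, dict]) -> list[list[str]]:
    """Group vendor IDs whose normalized name and postal code match (assumed rule)."""
    groups = defaultdict(list)
    for vendor_id, record in vendors.items():
        key = (normalize(record["name"]), record["postal_code"])
        groups[key].append(vendor_id)
    return [sorted(ids) for ids in groups.values() if len(ids) > 1]


def merge_to_winner(group: list[str], winner: str, info_records: list[dict]):
    """Repoint dependent records from the losing vendors to the winner.

    Returns the remapped records and the loser -> winner mapping,
    which can be stored as documentation of the decision.
    """
    if winner not in group:
        raise ValueError(f"{winner} is not part of the duplicate group {group}")
    mapping = {loser: winner for loser in group if loser != winner}
    remapped = [
        {**rec, "vendor": mapping.get(rec["vendor"], rec["vendor"])}
        for rec in info_records
    ]
    return remapped, mapping


# Hypothetical legacy data
vendors = {
    "V100": {"name": "Acme GmbH", "postal_code": "10115"},
    "V200": {"name": "ACME Gmbh.", "postal_code": "10115"},
    "V300": {"name": "Bolt AG", "postal_code": "80331"},
}
info_records = [
    {"vendor": "V200", "material": "M1"},
    {"vendor": "V300", "material": "M2"},
]

groups = find_duplicate_groups(vendors)
# The winner is a business decision; here V100 is simply assumed.
remapped, mapping = merge_to_winner(groups[0], "V100", info_records)
```

In a real project the matching rules are far richer, and the winner is chosen by the business through the web interface mentioned above; the point here is only that every dependent record follows the winner consistently.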

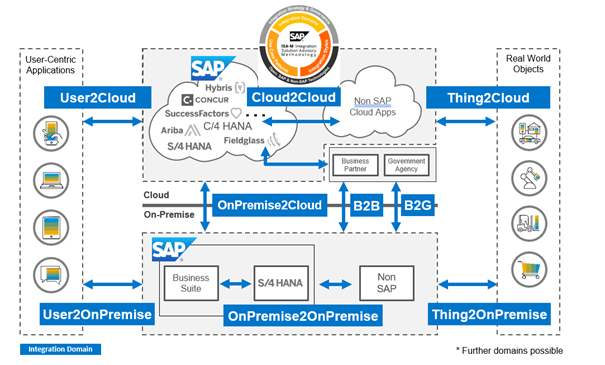

The basic architecture of such a solution looks like this:

Based on the experience and knowledge gained at that time, I have since persuaded customers in the projects I managed to bring in an appropriate migration partner whenever they faced a significant challenge in data cleansing or data migration. In my experience, the migration partner plans and manages the data migration workstream completely independently, including all related planning, risks and issues. From the viewpoint of project management and the customer, this means that the most critical factor of the SAP implementation is in safe hands and there can be a much greater focus on other topics. And that is a very good thing, because organizational change management usually demands much more attention and time from the key users involved, but I won’t digress here.

Here are some more recommendations on how to proceed with data cleansing to make it as effective as possible:

Distinguish between independent and dependent data:

- Independent data can be entered into the SAP system without a reference data object; these are essentially the material master, the business partner master (customers and vendors) and the G/L account master.

- Dependent data comprises all data objects that need a reference object (independent or dependent) in order to be created in the SAP system: a bill of material needs a material master, as does a routing; a production version needs a bill of material and a routing; a purchasing info record needs a vendor and a material; and so on.
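The distinction above also determines a valid load sequence: an object can only be loaded once everything it references is already in the system. As a minimal sketch, assuming only the example dependencies named above (the object names are my own labels, not SAP object keys), Python's standard `graphlib` module can derive such a sequence:

```python
from graphlib import TopologicalSorter

# Each object maps to the set of objects it needs as a reference.
# An empty set marks independent data.
DEPENDENCIES = {
    "material_master": set(),
    "business_partner": set(),
    "gl_account": set(),
    "bill_of_material": {"material_master"},
    "routing": {"material_master"},
    "production_version": {"bill_of_material", "routing"},
    "purchasing_info_record": {"business_partner", "material_master"},
}


def load_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one valid load sequence: every object comes after its references.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


def independent_objects(dependencies: dict[str, set[str]]) -> list[str]:
    """Objects that need no reference object."""
    return sorted(name for name, refs in dependencies.items() if not refs)


order = load_order(DEPENDENCIES)
independent = independent_objects(DEPENDENCIES)
```

The exact sequence is not unique, but in every valid result the independent objects appear before the objects that reference them, which is also why the cleansing steps below start with the independent data.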

Structure data cleansing into the following steps and work through them sequentially if possible:

- Active Record Determination: Determine which master records should be transferred to the new system. Examples: all vendors/customers with open items, open orders or purchase orders (this is virtually a must); all materials used in the last two years; etc.
  ⇒ In this step, focus on the independent data, since the dependent data is then implicitly classified as active or inactive as well.
  ⇒ All further cleansing activities are, of course, only carried out for active records. This step is so important because it essentially determines the scope of the data to be cleansed further.

- Required Field Content: Ensure that all fields to be used in S/4 in the future (primarily required fields and preferably also optional fields) can be filled according to the field definition/conversion rules.
  ⇒ Focus on the independent data again in this step, since for each independent data record that cannot be loaded due to missing data, various dependent records are lost as well; of course, the step is still relevant for all data.

- Formal Field Content: Ensure that all fields to be used in S/4 in the future (primarily required fields and preferably also optional fields) are filled in a formally correct way in accordance with the field definition/conversion rules. This means, for example, that the planned delivery time contains a number; whether that number is correct in terms of content is only of secondary relevance at this point.
  ⇒ Focus on the independent data again in this step, since for each independent data record that cannot be loaded due to formally incorrect data, various dependent records are lost as well; of course, the step is still relevant for all data.
  ⇒ When this cleansing step is done, the data load is of a quality that should be sufficient for an integration test.

- Business Readiness: Ensure that all fields to be used in S/4 in the future (primarily required fields and preferably also optional fields) are filled with correct content in accordance with the field definition/conversion rules. This means, for example, that the planned delivery time in the material master is correct in terms of content and therefore controls the dependent planning in the ERP correctly.
  ⇒ While the Required Field Content and Formal Field Content steps can be “faked”, this is not possible for this step; here, the business must check the data and, ideally, approve it by signature.
  ⇒ In many companies that have been using SAP ERP for years, I have unfortunately noticed that the employees involved in planning are often not really aware of how the fields that control the ERP interrelate, nor of what each field does individually. From my point of view, this is a fatal situation that must be remedied before this cleansing step, because otherwise the new ERP is steered in the wrong direction from the start and therefore delivers wrong results. It helps to provide an overview of the concrete intended use of all fields involved in planning (see example) and to assess all employees and managers involved in planning in order to determine their level of knowledge and training requirements in this regard (I can gladly provide implementation recommendations on separate request).
  ⇒ When this cleansing step is complete, the data load is of a quality that will be sufficient for user acceptance testing and a boring go-live.

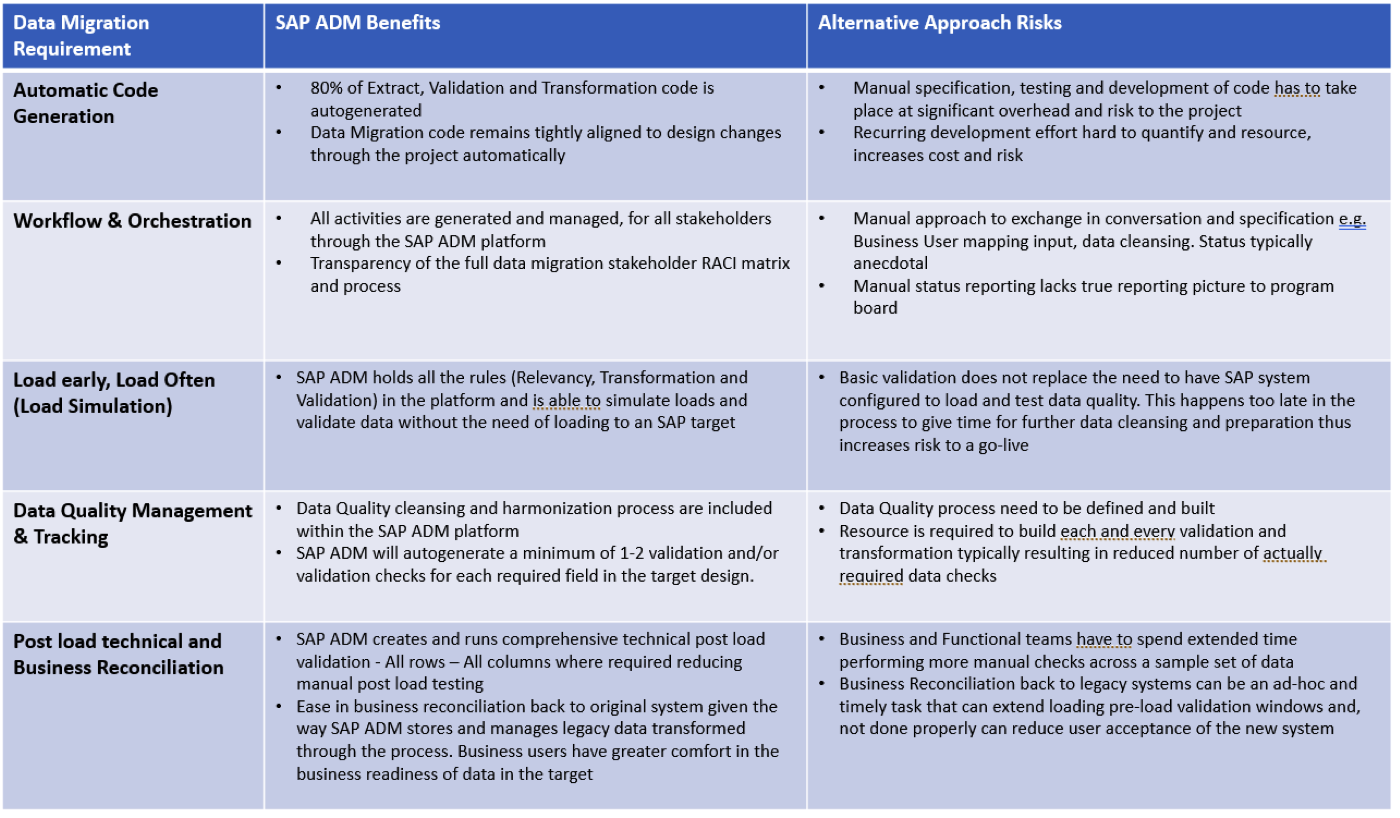

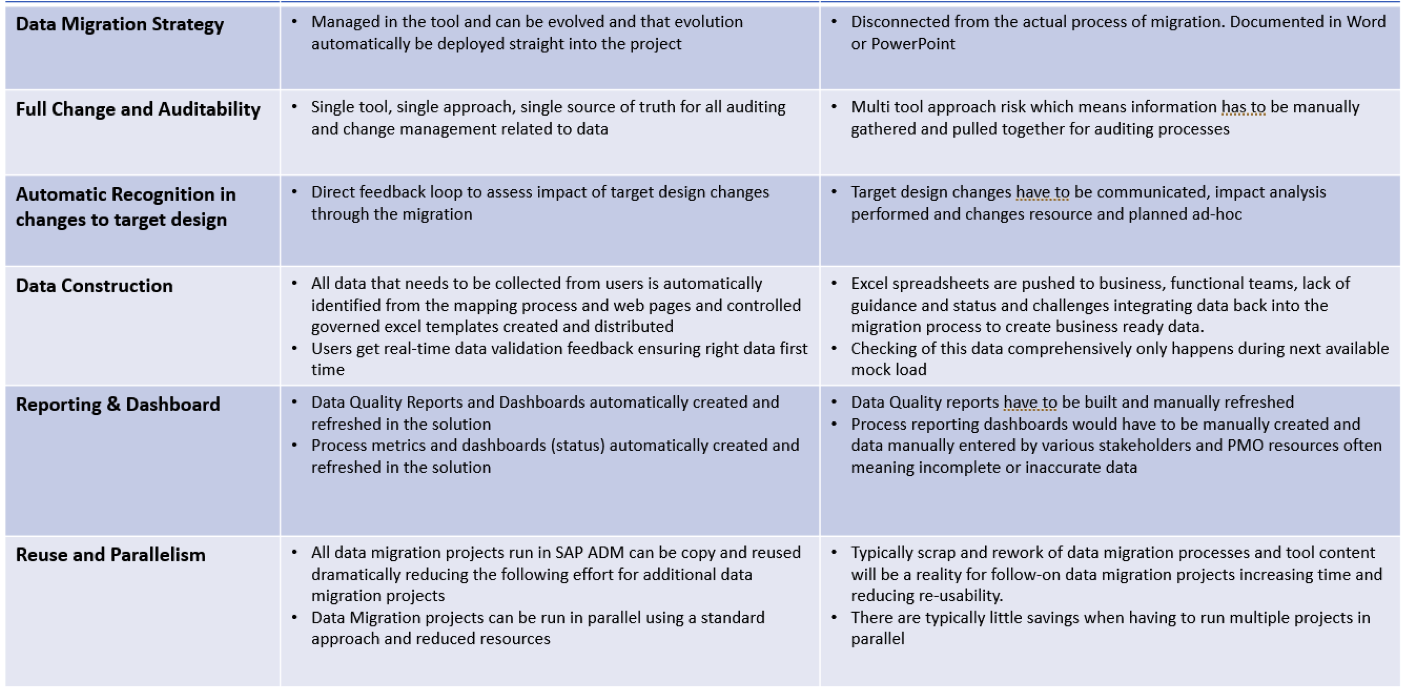
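The first three cleansing steps above lend themselves to automated checks. The following is a minimal sketch in Python, not the logic of any particular toolset. The field names, the two-year activity rule applied to a `last_used` date, and the list of required fields are assumptions for illustration. Business Readiness is deliberately left out, because it requires a review by the business rather than a formal check.

```python
from datetime import date

# Hypothetical required fields for a material master record
REQUIRED_FIELDS = ("description", "base_unit", "planned_delivery_days")


def is_active(material: dict, today: date, years: int = 2) -> bool:
    """Step 1 (assumed rule): active if used within the last `years` years."""
    last_used = material["last_used"]
    return last_used is not None and (today - last_used).days <= 365 * years


def missing_required(material: dict) -> list[str]:
    """Step 2: required fields that are empty or absent."""
    return [f for f in REQUIRED_FIELDS if material.get(f) in (None, "")]


def formal_errors(material: dict) -> list[str]:
    """Step 3: the planned delivery time must be a whole number, if filled."""
    value = material.get("planned_delivery_days")
    if value not in (None, "") and not str(value).isdigit():
        return ["planned_delivery_days is not a whole number"]
    return []


def cleansing_report(materials: dict[str, dict], today: date) -> dict[str, list[str]]:
    """Field-level issues for active records only; inactive records are skipped."""
    report = {}
    for material_id, material in materials.items():
        if not is_active(material, today):
            continue  # out of scope for all further cleansing
        issues = [f"missing {f}" for f in missing_required(material)]
        report[material_id] = issues + formal_errors(material)
    return report


# Hypothetical legacy records
materials = {
    "M1": {"description": "Bolt", "base_unit": "EA",
           "planned_delivery_days": "14", "last_used": date(2024, 3, 1)},
    "M2": {"description": "Nut", "base_unit": "",
           "planned_delivery_days": "two weeks", "last_used": date(2024, 6, 1)},
    "M3": {"description": "Old part", "base_unit": "EA",
           "planned_delivery_days": "7", "last_used": date(2019, 1, 1)},
}

report = cleansing_report(materials, today=date(2025, 1, 1))
```

With this sample data, M3 drops out as inactive, M1 passes both checks, and M2 is reported with a missing base unit and a delivery time that is not a number. Note that a formally valid value such as 14 days says nothing about whether 14 is the right number; that remains the business readiness check.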

I have gained data migration experience over the past 12 years as a project manager working closely with different vendors and their toolsets. From my point of view, SAP ADM in combination with a competent partner offers the most complete solution portfolio.

Here is an aggregate overview of the benefits and implied risks of most other approaches:

Source: Syniti

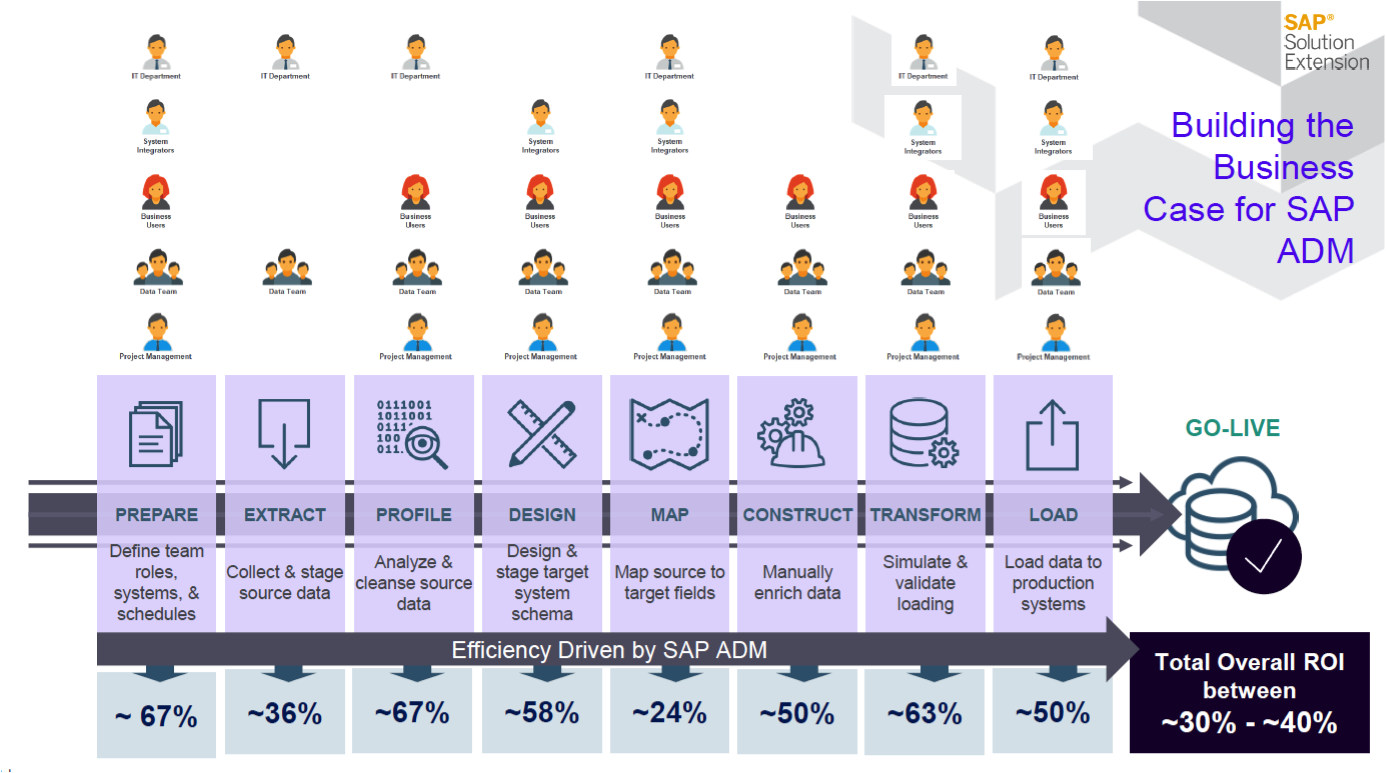

Using SAP ADM with a competent partner will certainly be a major budget item, so here is an overview of the potential savings:

Source: Syniti

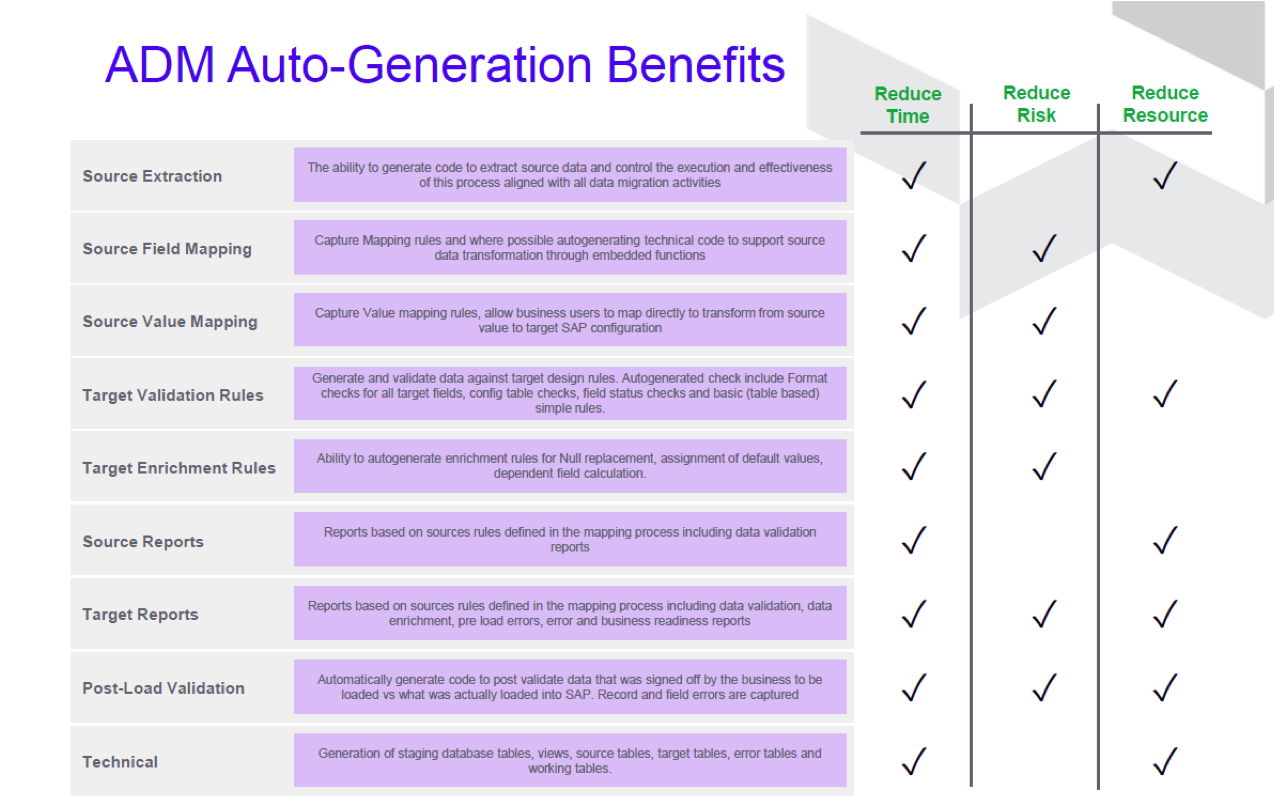

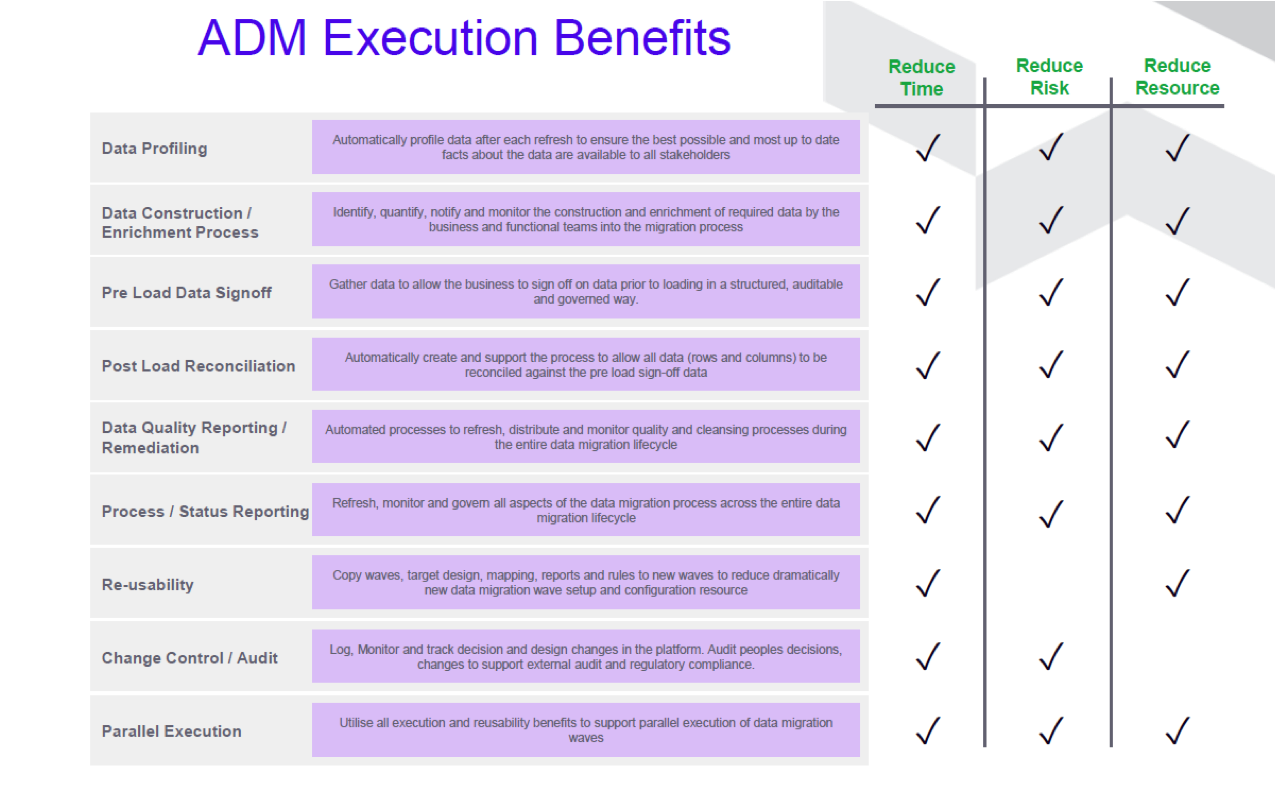

Here is also an overview of the impact on risks, resources and project duration:

Source: Syniti

On request, I am also happy to share my experience with other similar toolsets and the partners involved in each case. For more information, please feel free to visit the following websites:

https://www.syniti.com/solutions/data-migration/#sap-users

or also

An overview of our own tools for project management can be found here!